Placed on LinkedIn: Jan 27, 2024

Keywords: conditional probabilities, AI, LSD, Multi-Agent Models, Generative

Yesterday, Friday (Jan 26, 2024), I walked between my apartment and my local library, reading while I walked, as I had the prior day, Jan 25. I first dressed much less warmly than on Thursday, in thin pants, a long-sleeve shirt without a coat, and socks. However, on getting outside and feeling the sunshine, I returned to the apartment and switched into my mini-shorts, golf shirt, and sandals. Yesterday was the hottest Jan 26 on record in Washington, DC. However, as a scholar, I care more about the book than the weather. While walking, I was reading a book edited by professors of philosophy, titled “Handbook of the Computational Mind”.

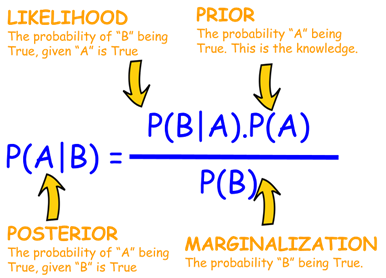

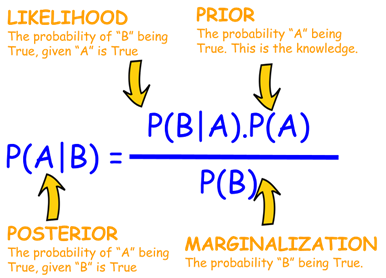

Yesterday's chapter was titled "Prediction Error Minimization in the Brain" by Jakob Hohwy. Jakob explains how hierarchical conditional probabilities (see Bayes' theorem) might offer the most compelling model for both neuroscientists and cognitive scientists. He contrasts the use of semantic primitives, neural nets, differential equations, and conditional probabilities, and for the first time I gained an appreciation for the biological plausibility of networks of conditional probabilities.

My new appreciation returns me to my explorations in 1971 into the workings of LSD (see my unpublished paper “The Biochemistry and Neuropsychology of LSD”, 1971). I had taken LSD and wondered how a drug could make one imagine new worlds. What a surprise to learn that what LSD did was to inhibit the inhibitory networks on our sensory input (to explain this byzantine approach you need to consider the evolution of our nervous system). Now return to Jakob’s chapter. We constantly filter out most sensory input. Selective attention is, oddly enough, implemented by keeping the senses always on but actively inhibiting the input of almost everything! And in case you have lost the relation to LSD: when you do not inhibit the senses, your mind cannot integrate the input into any real-world model and instead makes a new model that you would never confront in the real world. You hallucinate.

Neuroscientists and cognitive scientists need a model that accounts for our vast input being constantly, actively pruned, then distilled into certain features, and then integrated into complex models of reality. Take an example: you are driving down the road, your eyes catch far more information than you could ever manage, and so your visual system filters that vast information into a few edges. Those edges are connected into objects, such as the car in front of you, and your neocortex tells you what to do with your steering wheel and gas pedal. Neural networks (both biological and artificial) learn this over time by adjusting parameters. But artificial neural networks are not necessarily the most useful model of the brain. Other candidates include differential equations, semantic rules, and conditional probabilities. What conditional probabilities provide is a way to revise past expectations in light of new input: you update your estimated priors into estimated posteriors as evidence arrives, and those posteriors serve as the priors for the next round. Those estimates are simply numbers, means and standard deviations, and those numbers are amenable to gradual adjustment through learning.
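
To make the idea of estimates as means and standard deviations concrete, here is a minimal Python sketch. It is not taken from the chapter. It assumes the simplest possible case: a single belief modeled as a Gaussian, updated by noisy observations whose standard deviation is known. The names `Belief`, `update`, and `obs_std` are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Belief:
    """A belief summarized by two numbers (illustrative assumption: Gaussian)."""
    mean: float
    std: float


def update(prior: Belief, observation: float, obs_std: float) -> Belief:
    """Combine a Gaussian prior with one noisy observation (Bayes' theorem).

    Precision is 1 / variance; the more precise source gets more weight.
    """
    prior_precision = 1.0 / prior.std ** 2
    obs_precision = 1.0 / obs_std ** 2
    post_precision = prior_precision + obs_precision

    # The prediction error is the gap between what was expected and what arrived.
    prediction_error = observation - prior.mean

    # The gain (between 0 and 1) says how far to move toward the observation.
    gain = obs_precision / post_precision

    post_mean = prior.mean + gain * prediction_error
    post_std = post_precision ** -0.5
    return Belief(post_mean, post_std)


# Start with a vague belief, then let each posterior become the next prior.
belief = Belief(mean=0.0, std=10.0)
for obs in [4.8, 5.3, 5.1, 4.9]:
    belief = update(belief, obs, obs_std=1.0)
    print(f"mean={belief.mean:.2f}  std={belief.std:.2f}")
```

Each pass nudges the mean toward the incoming data by a fraction of the prediction error and shrinks the standard deviation, which is one simple reading of gradual adjustment through learning. A hierarchical model would stack many such beliefs, with each level predicting the level below it; that is beyond this sketch.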

After returning from my walk, I collapsed in bed for an hour. I am so physically weak these days from progressive, irreversible radiation damage. During the walk, I had to stop every few feet to catch my breath and hope the dizziness would stop before it made me fall to the ground. Still, in aggregate, I find these reading walks therapeutic and would be psychologically sadder without them.