Living systems, as we know them on Earth, are constrained by the laws of physics as they operate on Earth. We can create computer simulations of life, endow them with physics different from Earth's, and so have artificial realities of our own. Whoever creates such a reality is its ‘god’. For a biological living system, we might measure its success by its number of descendants. In our digital age, boundaries and reproduction transcend biology. How do we measure the contribution of an artificial living system? Some people measure success by referring to a soul and morals.

Discussions of abstract living systems are hard to digest. To simplify, say I want to create a massively multiplayer online game (MMOG). Such games include characters, called non-player characters (NPCs), whom people playing the game encounter. The human player interacts with NPCs to earn rewards. As Generative AI and Multi-Agent Systems are incorporated into the NPCs of MMOGs, those NPCs will become sapient. The humans who play these games want to escape their earthly reality, but only when the artificial reality bears a fundamental resemblance to it. To make the game reflect human society, concepts from religion are embedded in it. My inspiration for this essay comes from Richard Bartle’s book “How to Be a God”, at https://mud.co.uk/richard/How%20to%20Be%20a%20God.pdf. The rest of this essay could be considered a selective summary of Bartle’s book.

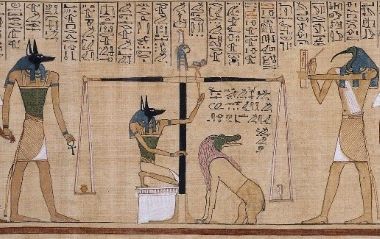

How would you implement St Nick’s scales in an artificial reality? Many online games implement such scales for player characters and call them a reputation system. You could give each NPC a soul with a counter initialized to zero. Your software adds points for bad deeds and subtracts points for good deeds. If your software allows the NPCs to judge themselves, what if their judgement differs from yours? For instance, an NPC might think a mercy killing is good, but you might not. You could tell each NPC whether its action is good by sending the action’s rating to the NPC’s mind as a sensory input. If you did that, the NPC would not need to reflect. If you want the NPCs to reflect, guided by your wishes, you could appear at intervals and tell them your wishes.
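The soul counter described above can be sketched in a few lines. This is a minimal sketch, not Bartle’s design or any game’s actual reputation system: the ratings table, the action names, and the `senses` list standing in for "sensory input" are all hypothetical. Following the convention in the text, bad deeds add points and good deeds subtract them, and an NPC can hold its own ratings that may disagree with the creator’s.

```python
from dataclasses import dataclass, field

# Hypothetical creator-defined ratings: positive = bad deed (adds points),
# negative = good deed (subtracts points).
CREATOR_RATINGS = {
    "steal": 3,
    "murder": 10,
    "mercy_killing": 5,  # this creator judges mercy killing as bad
    "share_food": -2,
    "heal": -4,
}


@dataclass
class Soul:
    """Per-NPC tally that starts at zero; a higher score means more bad deeds."""
    score: int = 0

    def record(self, rating: int) -> None:
        self.score += rating


@dataclass
class NPC:
    name: str
    soul: Soul = field(default_factory=Soul)
    # Hypothetical sensory channel: the creator's verdicts as the NPC perceives them.
    senses: list = field(default_factory=list)
    # The NPC's own moral judgements, which may differ from the creator's.
    own_ratings: dict = field(default_factory=dict)

    def act(self, action: str) -> None:
        rating = CREATOR_RATINGS.get(action, 0)  # unknown actions count as neutral
        self.soul.record(rating)
        # Deliver the verdict as sensory input, so the NPC need not reflect.
        self.senses.append(("moral_rating", action, rating))

    def disagrees_with_creator(self, action: str) -> bool:
        """True when the NPC and the creator rate the action with opposite signs."""
        own = self.own_ratings.get(action)
        creator = CREATOR_RATINGS.get(action, 0)
        return own is not None and own * creator < 0


npc = NPC("Aldric")
for action in ["share_food", "steal", "heal"]:
    npc.act(action)
print(npc.soul.score)  # -2 + 3 - 4 = -3

# An NPC that considers mercy killing a good deed disagrees with this creator.
medic = NPC("Mira", own_ratings={"mercy_killing": -5})
print(medic.disagrees_with_creator("mercy_killing"))  # True
```

Keeping the creator’s table separate from each NPC’s `own_ratings` makes the question in the text concrete: the game can either overwrite the NPC’s view through its senses or leave the two to diverge.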

Do we have moral obligations to our NPCs? Is it okay for an NPC you programmed to kill? The entities to which your morals apply are ‘morally able to be considered’, or morally considerable. In general, all sapient living systems are morally considerable, but sticky issues arise. For example, although babies lack morals, most people regard babies as morally considerable. If you draw the line of moral considerability at personhood, then non-sapient beings are not morally considerable. Or is sentience your red line? If a creature can suffer, does that make it morally considerable? Do you say that sentient beings are morally considerable but sapient beings are more important, so that in a choice between saving a child and saving a dog, you save the child?

In our artificial reality, if NPCs are morally considerable, then you, their creator, have a responsibility to do them no wrong. If you accept that your NPCs are moral beings, where would you put them in a hierarchy of importance? Are sapient NPCs in an artificial reality more important than sentient living systems in Earth’s reality?

As a god, you can stop all suffering by not implementing the concept. You could give your NPCs an awareness of stress so that they try to remove the stressor without suffering. If your NPCs are soulless bits in a database onto which players project emotions, as they might onto characters in a book, then why add suffering? Suffering might help your NPCs reflect on what is right and strive to behave morally. These issues aside, one reason to implement suffering is verisimilitude. Your players experience suffering in their earthly reality. If those players go to an artificial reality that knows no suffering, that reality will not seem convincing.
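One way to read "awareness of stress without suffering" is that stress is only an input signal that triggers avoidance, with no internal state that accumulates as pain. The minimal sketch below assumes that reading; the one-dimensional world, the inverse-distance stress formula, and the threshold of 0.5 are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class StressAwareNPC:
    """Hypothetical NPC that senses stress as a signal but has no suffering state."""
    position: int
    stress_threshold: float = 0.5

    def stress_from(self, stressor_position: int) -> float:
        # Stress grows as the stressor gets closer: 1.0 when adjacent or overlapping.
        distance = abs(self.position - stressor_position)
        return 1.0 / max(distance, 1)

    def step(self, stressor_position: int) -> str:
        stress = self.stress_from(stressor_position)
        if stress >= self.stress_threshold:
            # Move one cell away from the stressor; the signal drives behavior only
            # and is not stored anywhere afterwards.
            self.position += 1 if self.position >= stressor_position else -1
            return "avoid"
        return "idle"


villager = StressAwareNPC(position=2)
actions = [villager.step(stressor_position=1) for _ in range(3)]
print(actions, villager.position)  # ['avoid', 'avoid', 'idle'] 4
```

The villager moves away until the stressor is far enough that the signal falls below the threshold, then stops. Adding suffering would mean adding a persistent state that the NPC experiences beyond this signal, which is exactly the design choice the paragraph above leaves to the god.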

If you do not give your NPCs moral guidelines but have them evolve those guidelines, will their resulting ethics conform to yours? When you create a reality, you express your ethics through its design, because the nature of the reality you create determines the ethical systems the NPCs subsequently develop. Your ethics are rooted in your earthly reality. Would it make sense to insist that your NPCs operate under the same ethics that you do? Are ethical systems transitive, in the sense that the ethics encoded in a reality are representative of those encoded in any super-reality of which that reality is a sub-reality?

This evolution of gods is relevant to you as a game creator: it prompts you to reflect on your position as god and on how you help your game reflect your notion of godliness. How close is the character of a reality’s creator to the conceptions its NPCs hold? To what extent should the creator of an artificial reality instill in its NPCs the creator’s own religion?

The motivations for creating artificial realities depend on the reality’s target audience. You might create a reality for the benefit of:

I am worried about the progress of evolution on Earth. In the fight for resources, states frequently go to war. Can we build an artificial reality that interacts with our reality and decreases the likelihood of catastrophic wars?

Since my goal is to help states avoid war, my interest in an MMOG is to have NPCs and players somehow interact with states. If you were creating a game with this motivation, would you assign the NPCs souls and morals? Could you design a game that would somehow, eventually, help states stop waging war?

My audience for this essay is social: you! If I had a profit motive, then it would be in the social, divine, and spiritual categories. Finally: do your motives align with mine?